Model Context Protocol (MCP): 10 Must-Try MCP Servers for Developers

Aug'25 - Issue #63

Welcome to the AI Insights tribe. Join others who receive high-signal stories from the AI world. In case you missed it, subscribe to our newsletter. Today’s newsletter is brought to you by The Rundown AI!

Get the latest AI news, understand why it matters, and learn how to apply it in your work. Join 1,000,000+ readers from companies like Apple, OpenAI, and NASA.

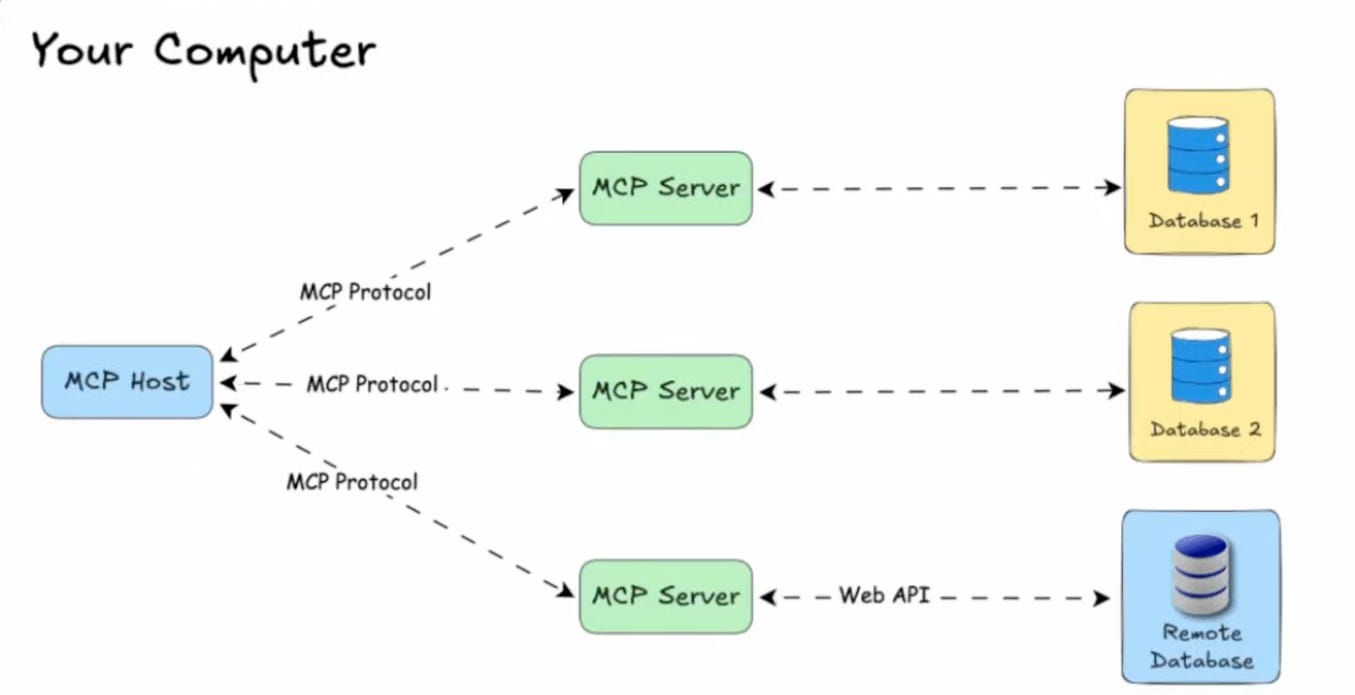

Let’s explore the Model Context Protocol (MCP): a standard way to connect tools, data, and models. MCP lets you run servers that expose capabilities such as search, file operations, or custom APIs, and make them instantly available to your agents or AI apps.

Understanding → Model Context Protocol (MCP)

MCP (Model Context Protocol) is an open standard that lets AI models interact with tools, APIs, and data in real time. Since Anthropic launched it in November 2024, adoption has grown quickly, with many developers and companies building their own servers.

Why it matters:

Standardization: Clear, structured communication between models and tools.

Flexibility: Works with any LLM or AI agent whose host application includes an MCP client.

Scalability: Client-server architecture supports multiple integrations from a single host.

Extensibility: Add custom servers for search, file ops, APIs, or any domain-specific task.
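Under the hood, that "structured communication" is JSON-RPC 2.0. The Python sketch below (standard library only) builds the two requests a client typically uses to discover and invoke a server's tools, `tools/list` and `tools/call`, and serializes each as a single line of JSON, which is how the stdio transport frames messages. The tool name `search_repositories` and its arguments are hypothetical placeholders, and a real client would first complete the `initialize` handshake.

```python
import json
from itertools import count
from typing import Optional

# Monotonic request IDs: JSON-RPC matches each response to its request by "id".
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def frame_for_stdio(msg: dict) -> str:
    """Serialize one message as a single newline-terminated line (stdio framing)."""
    return json.dumps(msg, separators=(",", ":")) + "\n"


# 1. Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# 2. Invoke one tool by name. "search_repositories" and its arguments are
#    hypothetical; use a name returned by tools/list.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_repositories", "arguments": {"query": "mcp"}},
)

if __name__ == "__main__":
    for msg in (list_tools, call_tool):
        print(frame_for_stdio(msg), end="")
```

Every server in this list speaks this same message format, which is why one client can talk to all of them.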

We’ve curated 10 MCP servers you should check out - perfect for developers building powerful AI workflows.

You can also learn more from our video tutorial.

How to use an MCP server in Cursor

We’ll walk through most setups in Cursor; the process is similar in other MCP clients such as Claude Desktop.

Open Cursor Settings → go to Tools and Integrations.

Click New MCP Server, which opens an mcp.json file.

Paste the server's configuration code into mcp.json.
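If you register several servers, editing mcp.json by hand gets error-prone. Here is a minimal Python sketch that merges one server entry into the top-level `mcpServers` object and writes the file back, keeping any other servers intact. The helper name `add_server` is hypothetical, and the path should be whichever mcp.json Cursor opened for you (commonly `~/.cursor/mcp.json` for global servers, though you should confirm that location in your own setup).

```python
import json
from pathlib import Path


def add_server(config_path: Path, name: str, entry: dict) -> dict:
    """Merge one server entry into an MCP config file and return the result.

    Existing servers are preserved; an entry with the same name is replaced.
    """
    config = {}
    if config_path.exists() and config_path.read_text().strip():
        config = json.loads(config_path.read_text())
    # The host reads every server from the top-level "mcpServers" object.
    config.setdefault("mcpServers", {})[name] = entry
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n")
    return config
```

For example, `add_server(Path("mcp.json"), "context7", {"url": "https://mcp.context7.com/mcp"})` adds the Context7 server from later in this list without touching entries you already have.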

1. GitHub MCP Server

The GitHub MCP server allows your AI to interact directly with GitHub repositories, issues, pull requests, and more. With this server, you can automate repository management, code reviews, and project tracking seamlessly.

To use the GitHub MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "github": {
      "url": "https://api.githubcopilot.com/mcp/",
      "headers": {
        "Authorization": "Bearer YOUR_GITHUB_PAT"
      }
    }
  }
}
```

Replace YOUR_GITHUB_PAT with your GitHub personal access token.

Example Query:

“List my GitHub repositories.” Cursor will fetch them for you through the GitHub MCP server.
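For a remote server like this one, the host sends JSON-RPC messages as HTTP POST requests to the configured `url`, attaching the `headers` from your config (here, the Bearer token). The sketch below only builds such a request with Python's `urllib` so you can see its shape; it never sends it. It assumes your PAT is in an environment variable named `GITHUB_PAT`, a name chosen for illustration. The `Accept` header lists both JSON and Server-Sent Events because MCP's Streamable HTTP transport lets the server answer with either.

```python
import json
import os
import urllib.request

GITHUB_MCP_URL = "https://api.githubcopilot.com/mcp/"


def build_mcp_post(url: str, token: str, message: dict) -> urllib.request.Request:
    """Build, but do not send, an HTTP POST carrying one JSON-RPC message."""
    return urllib.request.Request(
        url,
        data=json.dumps(message).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",  # same value as in mcp.json
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


# GITHUB_PAT is an illustrative variable name; the fallback is a placeholder.
token = os.environ.get("GITHUB_PAT", "YOUR_GITHUB_PAT")
# A real client sends "initialize" first; tools/list is shown for brevity.
req = build_mcp_post(
    GITHUB_MCP_URL, token, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
)

if __name__ == "__main__":
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```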

2. BrightData MCP Server

BrightData MCP server provides a powerful all-in-one solution for public web access and data scraping. With this server, your AI can access real-time web data, scrape websites, and gather information from across the internet.

How to use BrightData MCP Server in Claude Desktop

Open Claude Desktop.

Go to Settings → Developer → Edit Config.

Add the following to your claude_desktop_config.json:

```json
{
  "mcpServers": {
    "Bright Data": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "https://mcp.brightdata.com/mcp?token=YOUR_API_TOKEN_HERE"
      ]
    }
  }
}
```

Example Query:

“What’s Tesla’s current market cap?” BrightData will fetch current data and answer your question.
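If you'd rather open the file directly, Claude Desktop keeps claude_desktop_config.json in a per-user folder: `~/Library/Application Support/Claude/` on macOS and `%APPDATA%\Claude\` on Windows. The small Python helper below returns that path for the current OS. The function name is ours, not part of any Claude tooling, and you should confirm the location against Claude's documentation for your version.

```python
import os
import sys
from pathlib import Path


def claude_desktop_config_path(platform: str = sys.platform) -> Path:
    """Return the usual location of claude_desktop_config.json for a platform."""
    if platform == "darwin":  # macOS
        return (
            Path.home() / "Library" / "Application Support" / "Claude"
            / "claude_desktop_config.json"
        )
    if platform.startswith("win"):  # Windows: per-user roaming app data
        appdata = os.environ.get("APPDATA", str(Path.home() / "AppData" / "Roaming"))
        return Path(appdata) / "Claude" / "claude_desktop_config.json"
    raise NotImplementedError(f"No known Claude Desktop config path for {platform!r}")


if __name__ == "__main__":
    print(claude_desktop_config_path())
```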

3. GibsonAI MCP Server

GibsonAI MCP Server lets you design, deploy, manage, and scale serverless SQL databases directly from your favourite IDE. It gives your AI full, real-time context, so it knows your schema, API endpoints, and environment and can produce accurate, ready-to-use code instead of generic guesses.

How to use GibsonAI MCP Server in Cursor

Prerequisites

Create a GibsonAI account.

Install uv (it provides the uvx command).

Log in to the Gibson CLI; the MCP server won't work until you do. Run the following in your terminal and authenticate your account:

```shell
uvx --from gibson-cli@latest gibson auth login
```

To use the GibsonAI MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "gibson": {
      "command": "uvx",
      "args": ["--from", "gibson-cli@latest", "gibson", "mcp", "run"]
    }
  }
}
```

Example Query:

“Create an e-commerce database for my clothing brand.” Cursor will create the database for you through the GibsonAI MCP server. You can also ask it to deploy the database, and it will return the connection URL once deployment finishes.
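Stdio servers like this one only start if their `command` (here `uvx`; elsewhere in this list `npx` or `docker`) is on your PATH. A quick Python check with `shutil.which` can tell you which configured servers will fail to launch before you restart your editor. The helper name `missing_commands` is hypothetical.

```python
import shutil


def missing_commands(config: dict) -> dict:
    """Map server name -> command for stdio servers whose command isn't on PATH.

    Remote servers (entries with a "url" instead of a "command") are skipped.
    """
    missing = {}
    for name, entry in config.get("mcpServers", {}).items():
        command = entry.get("command")
        if command and shutil.which(command) is None:
            missing[name] = command
    return missing


if __name__ == "__main__":
    gibson = {
        "mcpServers": {
            "gibson": {
                "command": "uvx",
                "args": ["--from", "gibson-cli@latest", "gibson", "mcp", "run"],
            }
        }
    }
    print(missing_commands(gibson) or "All commands found")
```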

4. Notion MCP Server

The official Notion MCP server enables AI assistants to interact with Notion's API, providing a complete set of tools for workspace management. Your AI can create, read, update, and manage Notion pages, databases, and workspaces.

How to Use Notion MCP Server in Cursor

Create a Notion integration:

Go to Notion Integrations.

Click New Integration (or select an existing one).

Give it the capabilities it needs (read, update, or insert content), then grant it access to your pages: click Access → select the page(s) → click Update Access.

Copy your Internal Integration Secret.

To use the Notion MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "notionApi": {
      "command": "npx",
      "args": ["-y", "@notionhq/notion-mcp-server"],
      "env": {
        "NOTION_TOKEN": "ntn_****"
      }
    }
  }
}
```

Replace ntn_**** with your actual Notion Internal Integration Secret.

Example Query:

“Create a new Notion page titled ‘Weekly Report’ with today’s date and a checklist for tasks.”
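The `command`, `args`, and `env` fields describe a child process: the host launches `command` with `args` and adds the `env` entries to that process's environment, which is how NOTION_TOKEN reaches the Notion server. The Python sketch below mimics that step with a stand-in command (the current Python interpreter printing the variable) instead of `npx`, so it runs anywhere. It illustrates the idea rather than any client's actual implementation; in particular, whether a host also passes its own environment through is an assumption here.

```python
import os
import subprocess
import sys


def launch_stdio_server(entry: dict) -> subprocess.CompletedProcess:
    """Run a server entry the way a host would: command + args, with env added.

    A real host keeps the process alive and exchanges JSON-RPC over
    stdin/stdout; this sketch simply runs it to completion and captures output.
    """
    env = {**os.environ, **entry.get("env", {})}  # config values take priority
    return subprocess.run(
        [entry["command"], *entry.get("args", [])],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )


# Stand-in for the Notion entry: same shape, but a harmless command.
demo_entry = {
    "command": sys.executable,
    "args": ["-c", "import os; print(os.environ['NOTION_TOKEN'])"],
    "env": {"NOTION_TOKEN": "ntn_****"},
}

if __name__ == "__main__":
    print(launch_stdio_server(demo_entry).stdout.strip())
```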

5. Docker Hub MCP Server

Docker has built its own MCP server implementation that lets AI agents work with Docker images and Docker Hub repositories, helping your AI support containerized applications, deployments, and other DevOps tasks. It's especially useful for developers who want to automate their container workflows.

To use the Docker Hub MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "dockerhub": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "-e",
        "HUB_PAT_TOKEN",
        "mcp/dockerhub@sha256:b3a124cc092a2eb24b3cad69d9ea0f157581762d993d599533b1802740b2c262",
        "--transport=stdio",
        "--username=YOUR_DOCKERHUB_USERNAME"
      ],
      "env": {
        "HUB_PAT_TOKEN": "your_hub_pat_token"
      }
    }
  }
}
```

Replace YOUR_DOCKERHUB_USERNAME with your Docker Hub username and your_hub_pat_token with a Docker Hub personal access token.

Why It's Worth It:

Smart DevOps automation - Diagnose issues, optimize resources, and handle deployments intelligently

Skip the command memorization - Just describe what you want, AI handles the Docker complexity

Consistent dev environments - Spin up and manage development setups effortlessly
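The `args` array in the Docker Hub configuration is simply a `docker run` command split into tokens: `-i` keeps stdin open for the stdio transport, `--rm` removes the container when the session ends, and `-e HUB_PAT_TOKEN` with no value forwards that variable from the environment the host sets through `env`. The Python snippet below rebuilds the equivalent shell command with `shlex.join`, which can help when you want to run the server by hand while debugging. `YOUR_DOCKERHUB_USERNAME` is a placeholder.

```python
import shlex

# Same tokens as the "args" array in the Docker Hub mcp.json entry.
IMAGE = (
    "mcp/dockerhub@sha256:"
    "b3a124cc092a2eb24b3cad69d9ea0f157581762d993d599533b1802740b2c262"
)
args = [
    "run",
    "-i",                   # keep stdin open: the stdio transport needs it
    "--rm",                 # remove the container when the session ends
    "-e", "HUB_PAT_TOKEN",  # no value: forwarded from the caller's environment
    IMAGE,
    "--transport=stdio",    # everything after the image goes to the server
    "--username=YOUR_DOCKERHUB_USERNAME",  # placeholder
]

command_line = shlex.join(["docker", *args])

if __name__ == "__main__":
    print(command_line)
```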

Read Anthropic’s launch announcement for more background on MCP: https://www.anthropic.com/news/model-context-protocol

6. Browserbase MCP Server

The Browserbase MCP server allows LLMs to control a browser with Browserbase and Stagehand. This gives your AI the ability to navigate websites, fill forms, click buttons, and perform web automation tasks just like a human would. It's incredibly powerful for testing web applications, automating repetitive web tasks, or gathering data that requires interaction with dynamic web pages.

Why It's Worth It:

Handle complex web tasks - Fill forms, navigate dynamic sites, and bypass traditional scraping limitations

Smart testing automation - Adapt to UI changes and test user flows like a real person would

Eliminate repetitive tasks - Automate booking, monitoring, and other browser-based work

7. Context7 MCP Server

Context7 is a game-changer for developers tired of outdated code examples and hallucinated APIs from AI assistants. With Context7, your AI pulls up-to-date, version-specific docs and working code straight from the source with no tab-switching, no fake APIs, no outdated snippets. Just add "use context7" to your prompt in Cursor and get accurate, current code that works with today's library versions.

To use the Context7 MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "context7": {
      "url": "https://mcp.context7.com/mcp"
    }
  }
}
```

Example Query:

“Create a Next.js middleware that checks for a valid JWT in cookies and redirects unauthenticated users to /login. Use Context7.” It will fetch the latest docs and use them as context for writing the code.

8. Figma MCP Server

The Figma MCP server allows you to integrate with Figma APIs through function calling, supporting various design operations. Your AI can handle design file access, component extraction, and layout analysis seamlessly. This is particularly useful for design-to-code workflows and automated UI implementation systems.

To use the Figma MCP server in Cursor, paste the following into your mcp.json file:

```json
{
  "mcpServers": {
    "Framelink Figma MCP": {
      "command": "npx",
      "args": ["-y", "figma-developer-mcp", "--figma-api-key=YOUR-KEY", "--stdio"]
    }
  }
}
```

Replace YOUR-KEY with your Figma personal access token. With this server, your AI can convert Figma designs to code with far less manual interpretation.

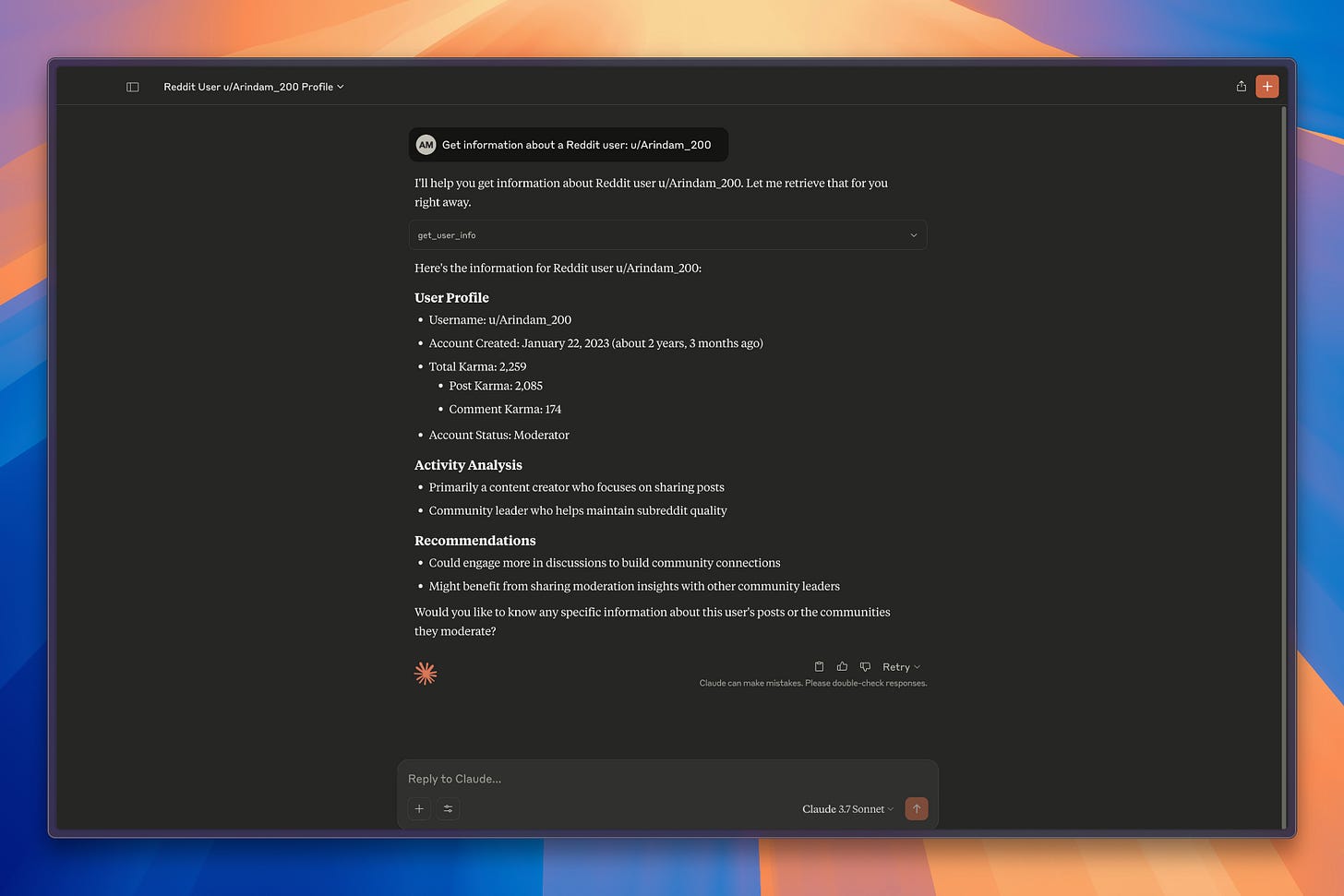

9. Reddit MCP Server

This Reddit MCP server implementation lets AI assistants fetch, analyze, and interact with Reddit content using PRAW (Python Reddit API Wrapper), complete with user insights, subreddit stats, and AI-driven recommendations.

10. Sequential Thinking MCP Server

Sequential Thinking is one of the most popular MCP servers, providing tools for dynamic and structured reasoning. This server enhances your AI's ability to think through complex problems step by step, making it perfect for tasks that require logical reasoning, planning, and structured problem-solving.

How to use Sequential Thinking MCP Server in Claude Desktop

Open Claude Desktop.

Go to Settings → Developer.

Click Edit Config to open claude_desktop_config.json.

Add the following to your claude_desktop_config.json file and save it:

```json
{
  "mcpServers": {
    "sequential-thinking": {
      "command": "npx",
      "args": [
        "-y",
        "@modelcontextprotocol/server-sequential-thinking"
      ]
    }
  }
}
```

Restart Claude Desktop.

Example Query:

“Plan a migration from Flask to FastAPI: inventory routes, map dependencies, rewrite patterns, testing strategy, rollout plan, and rollback triggers.”
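With a query like this, Claude calls the server's tool repeatedly, one thought per call. As a sketch, here is how such `tools/call` requests could be assembled in Python. The tool name `sequentialthinking` and the fields `thought`, `thoughtNumber`, `totalThoughts`, and `nextThoughtNeeded` follow the reference server's published input schema as we understand it; check the server's `tools/list` response for the exact, current schema. The three step texts are illustrative only.

```python
import json


def thought_call(request_id: int, thought: str, number: int, total: int) -> dict:
    """Build a tools/call request carrying one step of a reasoning chain."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "sequentialthinking",
            "arguments": {
                "thought": thought,
                "thoughtNumber": number,
                "totalThoughts": total,
                # Request another step until the last planned thought.
                "nextThoughtNeeded": number < total,
            },
        },
    }


steps = [
    "Inventory Flask routes and blueprints.",
    "Map each route's dependencies to FastAPI equivalents.",
    "Define the testing, rollout, and rollback plan.",
]
calls = [thought_call(i, t, i, len(steps)) for i, t in enumerate(steps, start=1)]

if __name__ == "__main__":
    print(json.dumps(calls[-1], indent=2))
```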

Wrap-Up

MCP makes it dead simple to plug real-world tools into your AI. In short, you can:

✅ Set up your first MCP server

✅ Wire it into your client/host and test quickly

✅ Add more servers to expand capabilities

✅ Ship a repeatable, production-ready workflow

👉 Explore more about MCP: Official Website

👉 Follow our Newsletter on X: Daily AI Insights

This Week in AI

WebWatcher - Alibaba open-sourced its vision-language deep research agent, available to the community at 7B and 32B parameter scales.

Novix - A PhD-level AI scientist for autonomous discovery, handling deep research, idea generation, coding, data analysis, experiments, and paper writing.

Grok Code Fast 1 - xAI launched a new, cheaper model for agentic coding.

Nano-Banana - Google launched gemini-2.5-flash-image-preview, a state-of-the-art image generation and editing model.

Hermes 4 - Nous Research launched Hermes 4 with expanded test-time compute capabilities. You can try it out on Nebius.

Thank you 🙏🏼 See you again next week! Until then, keep learning and sharing knowledge with your network.
